ICLR 2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

1) The document presents a new compression-based bound for analyzing the generalization error of large deep neural networks, even when the networks are not explicitly compressed.

2) It shows that if a trained network's weight matrices and the covariance matrices of its hidden-layer outputs are near low-rank (their singular values or eigenvalues decay quickly), then the network has a small intrinsic dimensionality and can be compressed efficiently.

3) This yields a generalization bound tighter than those of existing approaches and helps explain why overparameterized networks generalize well despite having more parameters than training examples.
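The link in point 2 between near low-rank weights and compressibility can be illustrated with a truncated SVD. The minimal sketch below assumes NumPy and uses a synthetic weight matrix whose singular values decay geometrically. The helper names `low_rank_compress` and `synthetic_weight`, the decay rate, and the 99% energy threshold are hypothetical choices for illustration. This is not the paper's compression scheme, and it does not compute the paper's bound.

```python
import numpy as np


def synthetic_weight(m, n, decay=0.5, seed=0):
    """Hypothetical m x n 'weight matrix' with singular values exp(-decay * i)."""
    rng = np.random.default_rng(seed)
    # Random orthonormal bases from QR decompositions of Gaussian matrices.
    U, _ = np.linalg.qr(rng.standard_normal((m, m)))
    V, _ = np.linalg.qr(rng.standard_normal((n, n)))
    k = min(m, n)
    s = np.exp(-decay * np.arange(k))  # fast (geometric) spectral decay
    return (U[:, :k] * s) @ V[:, :k].T


def low_rank_compress(W, energy=0.99):
    """Truncate W to the smallest rank r that keeps `energy` of ||W||_F^2.

    Returns the rank-r approximation and r.
    """
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    cum = np.cumsum(s**2) / np.sum(s**2)  # fraction of energy kept by top-i values
    r = min(int(np.searchsorted(cum, energy)) + 1, len(s))
    W_r = (U[:, :r] * s[:r]) @ Vt[:r, :]
    return W_r, r


if __name__ == "__main__":
    m, n = 256, 128
    W = synthetic_weight(m, n)
    W_r, r = low_rank_compress(W)
    rel_err = np.linalg.norm(W - W_r) / np.linalg.norm(W)
    # Storing the factors (U_r * s_r) and V_r needs r * (m + n) numbers.
    print(f"rank kept: {r}, relative error: {rel_err:.4f}")
    print(f"parameters: full {W.size}, factorized {r * (m + n)}")
```

With fast spectral decay only a handful of directions are kept, so the factorized layer needs a small fraction of the original parameter count. A flatter spectrum (smaller `decay`) would force a much larger rank for the same error.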

Iclr2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

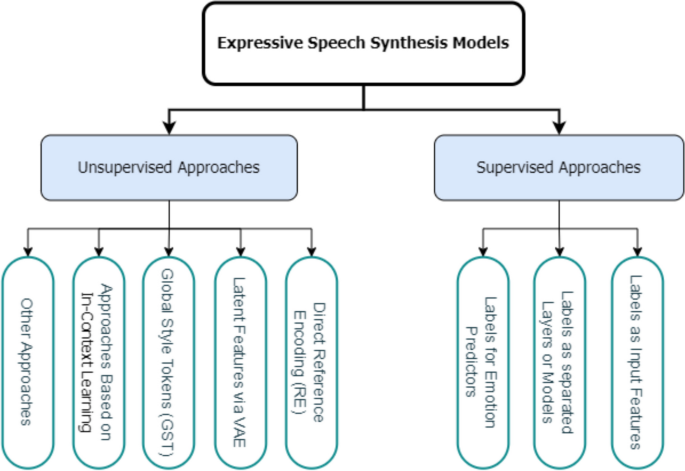

Deep learning-based expressive speech synthesis: a systematic review of approaches, challenges, and resources, EURASIP Journal on Audio, Speech, and Music Processing

How does unlabeled data improve generalization in self training

Jokyokai2

Emergence of Invariance and Disentangling in Deep Representations

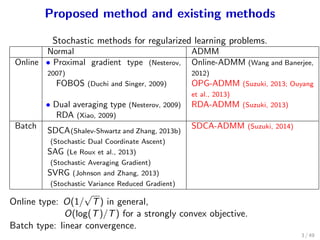

Stochastic Alternating Direction Method of Multipliers

Non-IID Distributed Learning with Optimal Mixture Weights

ICLR 2020

ICLR 2020

ICLR2021 (spotlight)] Benefit of deep learning with non-convex noisy gradient descent

Meta Learning Low Rank Covariance Factors for Energy-Based Deterministic Uncertainty